Atlassian accidentally DDoS'ed their own password change service.

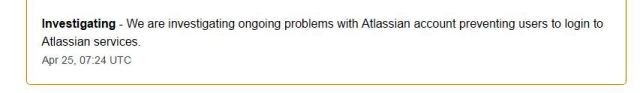

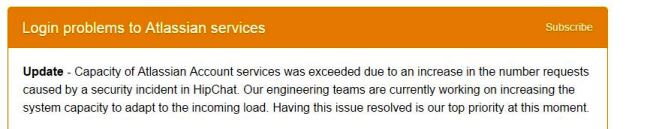

If your company is facing a suspected security breach, forcing your customers and clients to change their passwords is not a bad thing to do. However, when you do this, don't end up DDoS'ing your own system, as Atlassian apparently have.

In case you don't know, Atlassian make the JIRA project management and issue tracking tool as well as the popular HipChat chat room software, and they've recently acquired Trello.

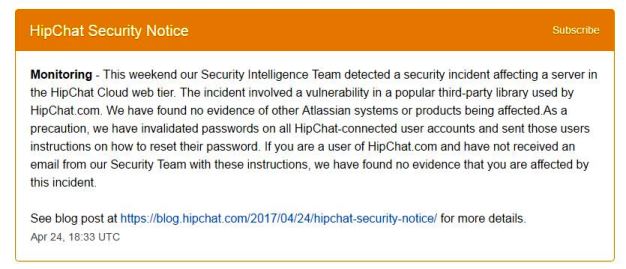

The sorry tale kicks off on Sunday.

Atlassian's blog post about the incident is open and well written, and it explains that passwords were stored as salted bcrypt hashes. All good there, then.
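To make "salted and hashed" concrete, here is a minimal sketch of the general idea. It is not Atlassian's implementation: it uses PBKDF2 from Python's standard library as a stand-in for bcrypt (which needs a third-party package), and the iteration count is an assumed, illustrative value.

```python
import hashlib
import hmac
import os

# Illustrative work factor (an assumption); bcrypt uses its own "cost" parameter instead.
ITERATIONS = 200_000


def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, digest) for a password, using a fresh random salt."""
    salt = os.urandom(16)  # unique per password, stored alongside the hash
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, digest: bytes) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, digest)


if __name__ == "__main__":
    salt1, hash1 = hash_password("correct horse battery staple")
    salt2, hash2 = hash_password("correct horse battery staple")
    # Same password, different salts, so the stored hashes differ.
    print(hash1 != hash2)
    print(verify_password("correct horse battery staple", salt1, hash1))
    print(verify_password("wrong guess", salt1, hash1))
```

Because each password gets its own salt, two users with the same password end up with different stored hashes, and the deliberately slow key derivation makes brute-forcing a leaked database expensive. In a real system you'd reach for a vetted bcrypt, scrypt or Argon2 library rather than hand-rolling this.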

The last line of the blog post is an interesting one, though: "If you are a user of HipChat.com and have not received an email from our Security Team with these instructions, we have found no evidence that you are affected by this incident." I'll come back to this.

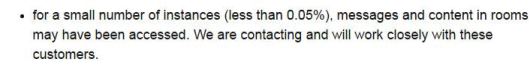

The blog post does admit that customers may have lost data. That's something to be aware of with cloud-based chat services like HipChat.